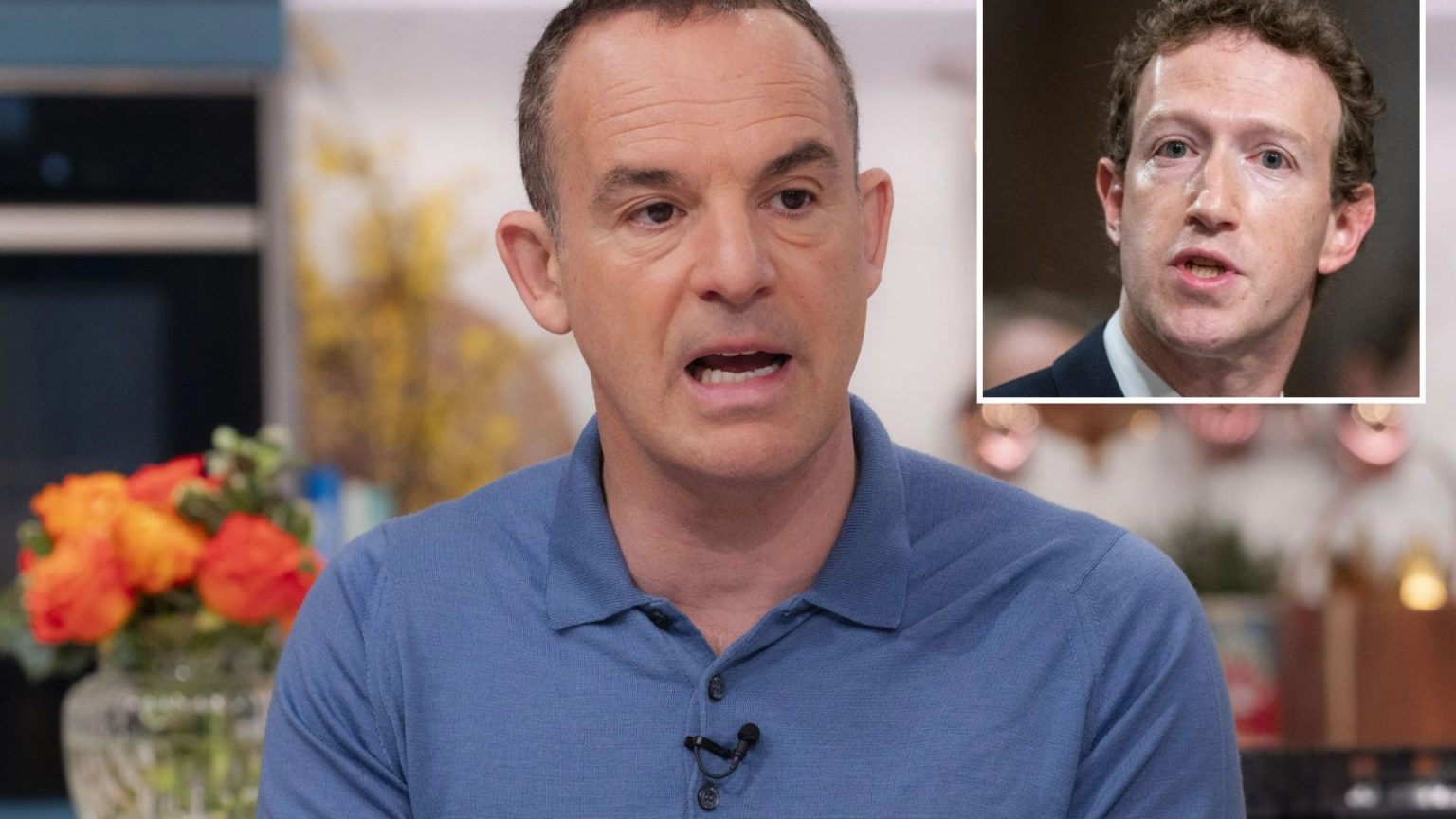

Martin Lewis, the well-known consumer finance expert, has voiced deep concern over Meta’s decision to end its fact-checking program and scale back content moderation across its platforms, including Facebook, Instagram, and Threads. The policy shift, announced by Meta CEO Mark Zuckerberg, has raised alarms that misinformation and scams could proliferate, with vulnerable users most at risk. Lewis, who previously took legal action against Facebook over scam advertisements, fears the change could create fertile ground for fraud and financial exploitation. He has asked whether the move will “free up more people to be ripped off,” underscoring the role fact-checkers play in shielding users from malicious content and protecting their finances.

Zuckerberg’s decision, which appears to follow Elon Musk’s approach to content moderation on X (formerly Twitter), is intended to prioritize free speech and reduce what he describes as politically biased moderation. He argues that the current system too often removes legitimate content by mistake and that community notes, similar to those on X, will be a more effective alternative. The shift also involves relocating content moderation teams from California to Texas, a move that mirrors Musk’s strategy and has raised further questions about bias in content oversight. Zuckerberg framed the announcement as part of a broader cultural shift toward prioritizing free speech, one he says is reflected in recent election outcomes.

The close relationship between Zuckerberg and President-elect Donald Trump adds another layer of complexity. Trump, who has returned to social media platforms after a period of suspension, praised Meta’s policy change as “impressive,” further signaling the alignment between the two men. That connection, together with the appointment of UFC boss Dana White, a close Trump associate, to Meta’s board of directors, suggests that political considerations may be influencing the company’s content moderation policies.

The move has sparked significant debate about how to balance free speech against the responsibility of social media platforms to protect users from harmful content. Dawn Alford, Executive Director of the Society of Editors, stresses the role of traditional media in providing reliable, verified information as online misinformation grows. She argues that fact-checking requires expertise, context, and independence, qualities that a decentralized, user-driven model such as Meta’s proposed community notes may struggle to guarantee. Her concerns point to the risks of relying on community-based moderation in complex areas that demand specialized knowledge, such as finance, where misinformation can have severe real-world consequences.

Lewis’s warning, together with concerns from media experts, highlights the potential dangers of this policy shift. Without professional fact-checkers, users could be exposed to a flood of misinformation, scams, and manipulative content. The risk is especially acute in finance, where misleading information can lead to significant losses. Community-based moderation may bring in more diverse perspectives, but it is far from clear that it can counter sophisticated scams and misinformation campaigns, particularly those aimed at vulnerable people.

The implications of Meta’s decision extend beyond financial scams. Reduced content moderation could also lead to a rise in hate speech, harassment, and other harmful content. Without professional oversight, malicious actors could exploit the platforms’ reach to spread disinformation and incite harm. The consequences could be far-reaching, affecting not only individual users but also public discourse and democratic processes. The policy change marks a critical juncture in the ongoing debate over the role and responsibility of social media platforms in regulating online content and protecting their users from harm. Whether community-based moderation proves effective remains to be seen, and its risks warrant close scrutiny and ongoing evaluation.